Currents, 2017

Interactive Installation

GradEx 2017, OCADu

Projection Mapping, Interactive Installation, Processing, Kinect V2

We are living in the epoch of the Anthropocene, which is defined by significant human influence on Earth’s geology and ecosystems. The destructive nature of anthropocentric tendencies is exemplified by the near extinction of the Pacific bluefin tuna. Since the 1960s, the rising popularity of sushi in the West has caused a dramatic shift in the value of bluefin tuna.[1] Once ground up and used as cat food, bluefin tuna is now valued at over two hundred dollars per pound.

Currents is an interactive illustration of human influence on the disappearance of species and on drastic changes to the environment. As bodies interact with the installation, the migration pattern of the Pacific bluefin tuna is disrupted. A person’s movement is represented as currents moving across the Earth’s oceans. The Pacific bluefin then follows the movement of the human body and eventually vanishes. The more people enter the frame, the more fish are affected. As people exit the frame, the population of the Pacific bluefin tuna slowly replenishes, restoring the species within its environment. The question remains: is a natural balance ever possible within the Anthropocene?
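The depletion and recovery described above could be modelled in many ways. The following is a minimal sketch in plain Java (the language Processing sketches are translated into), assuming the number of tracked bodies is known every frame. The `TunaPopulation` class, its loss and regrowth rates, and the logistic recovery curve are hypothetical illustrations, not the installation's actual code.

```java
/**
 * Hypothetical sketch of the population rule described above: bodies in
 * the frame deplete the visible tuna population, and it regrows toward a
 * carrying capacity once the frame is empty. All rates are assumptions.
 */
class TunaPopulation {
    final int capacity;          // maximum number of fish drawn on screen
    final double lossPerPerson;  // fraction of fish lost per person per frame
    final double regrowthRate;   // logistic growth rate per frame
    double population;

    TunaPopulation(int capacity, double lossPerPerson, double regrowthRate) {
        this.capacity = capacity;
        this.lossPerPerson = lossPerPerson;
        this.regrowthRate = regrowthRate;
        this.population = capacity; // start with a healthy population
    }

    /** Advances the model by one frame given the number of tracked bodies. */
    void step(int peopleInFrame) {
        if (peopleInFrame > 0) {
            // More people in the frame means a larger share of fish affected.
            double loss = Math.min(1.0, lossPerPerson * peopleInFrame);
            population -= population * loss;
        } else {
            // Logistic regrowth; the max(...) seeds recovery even from zero.
            population += regrowthRate * Math.max(population, 1.0) * (1.0 - population / capacity);
        }
        population = Math.max(0.0, Math.min(capacity, population));
    }

    /** Number of fish to draw this frame. */
    int visibleFish() {
        return (int) Math.round(population);
    }

    public static void main(String[] args) {
        TunaPopulation tuna = new TunaPopulation(200, 0.02, 0.05);
        for (int i = 0; i < 60; i++) tuna.step(3);  // three visitors for 60 frames
        System.out.println("after visitors: " + tuna.visibleFish());
        for (int i = 0; i < 300; i++) tuna.step(0); // frame empties
        System.out.println("after recovery: " + tuna.visibleFish());
    }
}
```

A logistic curve is one convenient choice because recovery starts quickly and slows as the population nears its original size; a simple linear refill would also work.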

Role: TouchDesigner & Unreal Engine Developer

Organization: Public Visualization Lab (OCADu)

Technology: Processing, Kinect V2

Process and documentation

This piece was created as part of my self-directed thesis project at OCAD University. The goal of the project was to experiment with techniques beyond the scope of traditional graphic design, which typically centres on print or web. The format of this work draws inspiration from generative art, projection mapping and computer vision. Processing was selected as the development framework because of its large contributor community, extensive documentation and library base, which accommodates a wide variety of peripheral devices. Currents uses a Kinect V2 to capture depth data and builds on code adapted from Trent Brooks (Noise Ink), Hidetoshi Shimodaira (Optical flow), Thomas Diewald (PixelFlow) and Daniel Shiffman (The Nature of Code).
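As a rough illustration of how depth data can isolate a visitor, the sketch below thresholds a Kinect V2 depth frame (512 × 424 pixels, depth in millimetres, 0 meaning no reading) into a presence mask and computes the mask's centroid. It assumes the frame has already been copied into an `int[]` by whichever Kinect library is in use; `DepthMask`, the near/far band and the synthetic test frame are hypothetical.

```java
/**
 * Hypothetical sketch: turn a Kinect V2 depth frame (512 x 424 values in
 * millimetres, assumed already copied into an int[]) into a binary
 * "body present" mask by keeping only pixels inside a near/far band.
 */
class DepthMask {
    static final int WIDTH = 512;
    static final int HEIGHT = 424;

    /** True for pixels whose depth lies in [nearMm, farMm]; 0 means no reading. */
    static boolean[] threshold(int[] depthMm, int nearMm, int farMm) {
        boolean[] mask = new boolean[depthMm.length];
        for (int i = 0; i < depthMm.length; i++) {
            int d = depthMm[i];
            mask[i] = d != 0 && d >= nearMm && d <= farMm;
        }
        return mask;
    }

    /** Centroid of the mask in pixel coordinates, or null if the mask is empty. */
    static double[] centroid(boolean[] mask, int width) {
        double sx = 0, sy = 0;
        int count = 0;
        for (int i = 0; i < mask.length; i++) {
            if (mask[i]) {
                sx += i % width; // column
                sy += i / width; // row (integer division)
                count++;
            }
        }
        return count == 0 ? null : new double[] { sx / count, sy / count };
    }

    public static void main(String[] args) {
        // Synthetic frame: background at 4 m, a "person" block at 1.5 m.
        int[] depth = new int[WIDTH * HEIGHT];
        java.util.Arrays.fill(depth, 4000);
        for (int y = 100; y < 300; y++)
            for (int x = 200; x < 260; x++)
                depth[y * WIDTH + x] = 1500;
        boolean[] mask = threshold(depth, 500, 2500);
        double[] c = centroid(mask, WIDTH);
        System.out.printf("centroid: (%.1f, %.1f)%n", c[0], c[1]);
    }
}
```

In a complete sketch, the mask (or the motion between successive masks, as an optical flow step would estimate) would then drive the currents that steer the fish.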

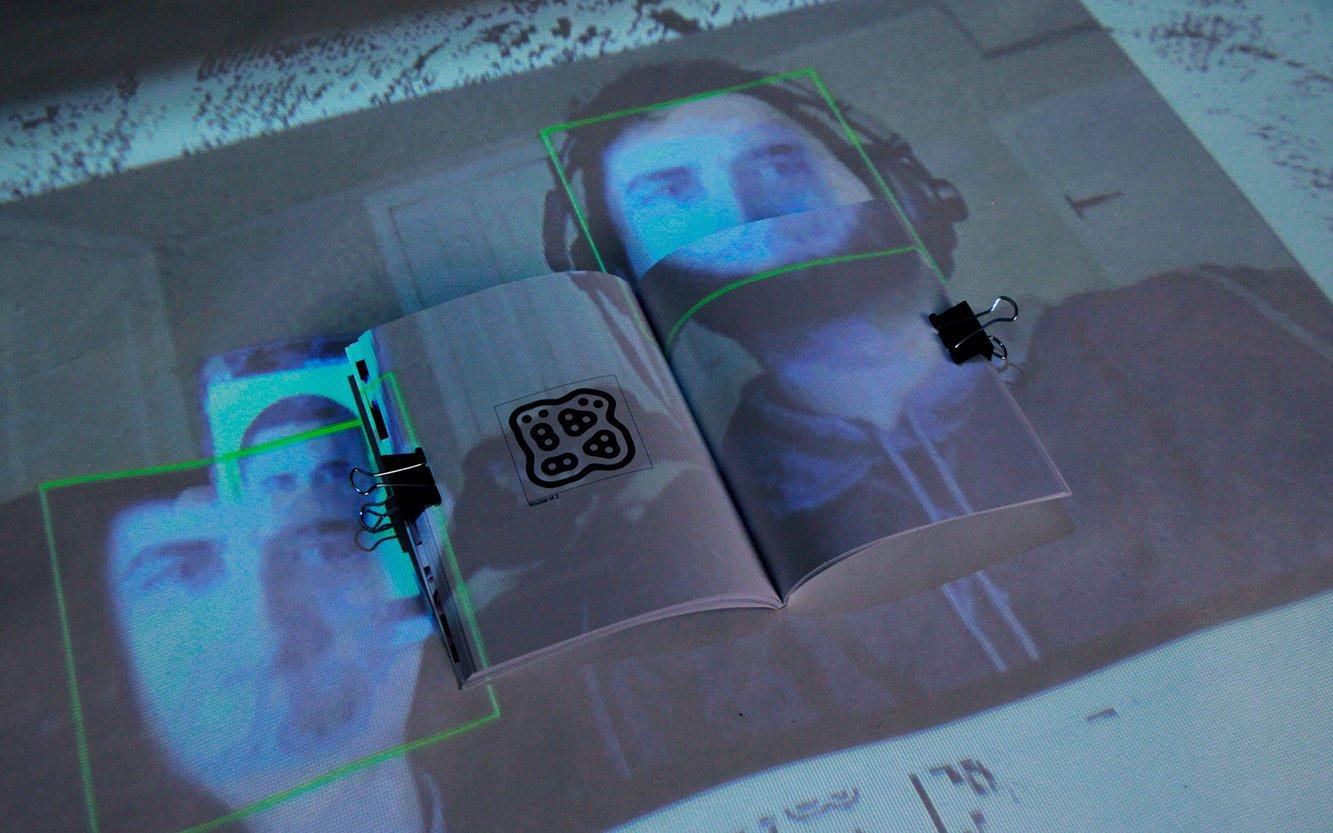

The GIFs below document some of the early-stage prototypes. Some of these experiments focused on reproducing the experience of self-observation and the illusions associated with mirrors. The example below attempts to replicate the strange-face-in-the-mirror illusion, which occurs when people looking at their reflection in dim lighting see facial distortions and apparitions. The viewer perceives stationary objects as disappearing, while moving objects come into focus. Other prototypes explored fundamental programming concepts and interactive applications of computer vision: facial recognition, colour detection, marker detection and real-time video manipulation.
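One simple way to approximate the stillness effect described above is background subtraction with a running average: pixels that stop changing are absorbed into the background and fade from the output, while moving regions stay bright. This is an assumed technique for illustration, not necessarily how the prototype worked; `StillnessFade` and its learning rate are hypothetical, and frames are taken to be grayscale values from 0 to 255.

```java
/**
 * Hypothetical sketch of a "stillness fades, motion stays" effect using a
 * running-average background model on grayscale frames (values 0-255).
 * Pixels that stop changing are absorbed into the background and vanish
 * from the output; pixels that keep changing remain visible.
 */
class StillnessFade {
    private final float[] background;
    private final float learningRate; // 0..1, how quickly still pixels fade (assumed)

    StillnessFade(int pixelCount, float learningRate) {
        this.background = new float[pixelCount];
        this.learningRate = learningRate;
    }

    /** Returns an output frame whose intensity is |frame - background|. */
    int[] process(int[] gray) {
        int[] out = new int[gray.length];
        for (int i = 0; i < gray.length; i++) {
            float diff = Math.abs(gray[i] - background[i]);
            out[i] = Math.min(255, Math.round(diff));
            // Exponential moving average: the background drifts toward the current frame.
            background[i] += learningRate * (gray[i] - background[i]);
        }
        return out;
    }

    public static void main(String[] args) {
        StillnessFade fx = new StillnessFade(1, 0.1f);
        int[] still = { 200 }; // a single pixel that never moves
        for (int t = 0; t < 40; t++) {
            int v = fx.process(still)[0];
            if (t % 10 == 0) System.out.println("frame " + t + ": " + v);
        }
    }
}
```

A higher learning rate makes still objects vanish faster, so it is the natural parameter to tune when matching the perceived fading.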

The programming of the creatures’ movement and behaviour was modelled on Craig Reynolds’ boids simulation, as described in Daniel Shiffman’s The Nature of Code. Earlier prototypes used Conway’s Game of Life as a starting point for interaction between viewers and agents, with the Kinect V2 capturing the user’s body and hand movements; creatures/cells were created or destroyed with hand gestures. Later iterations use the Kinect’s depth sensor to let viewers interact with agents flocking along paths.
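The three classic boids rules (separation, alignment and cohesion) can be sketched compactly. The version below follows the steering formulation popularised by The Nature of Code (desired velocity minus current velocity, capped at a maximum force), but the `Flock` class, radii, weights and speeds are assumptions chosen for illustration, not values from Currents.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

/**
 * Minimal sketch of Reynolds-style flocking (separation, alignment,
 * cohesion). Radii, weights and speeds are illustrative assumptions.
 */
class Flock {
    static final double NEIGHBOR_RADIUS = 50;
    static final double SEPARATION_RADIUS = 20;
    static final double MAX_SPEED = 2.0;
    static final double MAX_FORCE = 0.05;

    static class Boid {
        double x, y, vx, vy;
        Boid(double x, double y, double vx, double vy) { this.x = x; this.y = y; this.vx = vx; this.vy = vy; }
    }

    final List<Boid> boids = new ArrayList<>();

    /** Scales (dx, dy) so its length is at most max. */
    static double[] limit(double dx, double dy, double max) {
        double len = Math.hypot(dx, dy);
        if (len > max && len > 0) { dx = dx / len * max; dy = dy / len * max; }
        return new double[] { dx, dy };
    }

    /** Reynolds steering: desired velocity (at max speed) minus current velocity. */
    static double[] steer(Boid b, double dx, double dy) {
        double len = Math.hypot(dx, dy);
        if (len == 0) return new double[] { 0, 0 };
        return limit(dx / len * MAX_SPEED - b.vx, dy / len * MAX_SPEED - b.vy, MAX_FORCE);
    }

    void step() {
        double[][] acc = new double[boids.size()][2];
        for (int i = 0; i < boids.size(); i++) {
            Boid b = boids.get(i);
            double sepX = 0, sepY = 0, aliX = 0, aliY = 0, cohX = 0, cohY = 0;
            int neighbors = 0;
            for (Boid o : boids) {
                if (o == b) continue;
                double dx = b.x - o.x, dy = b.y - o.y;
                double d = Math.hypot(dx, dy);
                if (d > 0 && d < NEIGHBOR_RADIUS) {
                    aliX += o.vx; aliY += o.vy;   // alignment: match neighbours' heading
                    cohX += o.x;  cohY += o.y;    // cohesion: move toward their centre
                    neighbors++;
                    if (d < SEPARATION_RADIUS) {  // separation: push away, weighted by 1/d
                        sepX += dx / (d * d); sepY += dy / (d * d);
                    }
                }
            }
            if (neighbors > 0) {
                double[] s = steer(b, sepX, sepY);
                double[] a = steer(b, aliX, aliY);
                double[] c = steer(b, cohX / neighbors - b.x, cohY / neighbors - b.y);
                acc[i][0] = 1.5 * s[0] + a[0] + c[0];
                acc[i][1] = 1.5 * s[1] + a[1] + c[1];
            }
        }
        // Apply accelerations only after all are computed, so updates are simultaneous.
        for (int i = 0; i < boids.size(); i++) {
            Boid b = boids.get(i);
            double[] v = limit(b.vx + acc[i][0], b.vy + acc[i][1], MAX_SPEED);
            b.vx = v[0]; b.vy = v[1];
            b.x += b.vx; b.y += b.vy;
        }
    }

    public static void main(String[] args) {
        Flock flock = new Flock();
        Random rng = new Random(1);
        for (int i = 0; i < 30; i++)
            flock.boids.add(new Boid(rng.nextDouble() * 100, rng.nextDouble() * 100,
                                     rng.nextDouble() * 2 - 1, rng.nextDouble() * 2 - 1));
        for (int t = 0; t < 200; t++) flock.step();
        Boid first = flock.boids.get(0);
        System.out.printf("boid 0 at (%.1f, %.1f)%n", first.x, first.y);
    }
}
```

Interaction with viewers could be layered on as a fourth steering force, for example one pointing along the depth-derived currents, weighted alongside the three rules.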

Interactive documentation journal

The entire process and research for Currents was documented in a process journal and displayed as an augmented projection-mapped book. The first half of the book contains notes and research exploring the analogy between mirrors and anthropocentrism. The latter half consists of tests, examples and notes on computer vision. I used Processing and reacTIVision, installed on a Windows PC, to read fiducial markers printed in the book. A projector and a camera (Kinect V2) were mounted on tripods and then aligned with the markers.
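reacTIVision reports marker positions through the TUIO protocol as normalised coordinates (0 to 1) in the camera's view, so aligning projected content with the printed markers requires mapping those coordinates into projector pixels. For a flat page, a common approach is a planar homography estimated from four corresponding points, and the sketch below assumes that approach. `MarkerMapper` and the calibration values are hypothetical, and receiving the TUIO messages themselves is left out.

```java
/**
 * Hypothetical sketch: map a fiducial position reported by reacTIVision
 * (TUIO coordinates, normalised 0..1 in camera space) to projector pixels
 * with a planar homography estimated from four calibration points.
 */
class MarkerMapper {
    private final double[] h = new double[9]; // row-major 3x3, h[8] fixed to 1

    /** Solves for H so that dst ~ H * src, from exactly four point pairs. */
    MarkerMapper(double[][] src, double[][] dst) {
        double[][] a = new double[8][9]; // augmented 8x8 system [A | b]
        for (int i = 0; i < 4; i++) {
            double x = src[i][0], y = src[i][1], u = dst[i][0], v = dst[i][1];
            a[2 * i]     = new double[] { x, y, 1, 0, 0, 0, -u * x, -u * y, u };
            a[2 * i + 1] = new double[] { 0, 0, 0, x, y, 1, -v * x, -v * y, v };
        }
        // Gauss-Jordan elimination with partial pivoting.
        for (int col = 0; col < 8; col++) {
            int pivot = col;
            for (int r = col + 1; r < 8; r++)
                if (Math.abs(a[r][col]) > Math.abs(a[pivot][col])) pivot = r;
            double[] tmp = a[col]; a[col] = a[pivot]; a[pivot] = tmp;
            if (Math.abs(a[col][col]) < 1e-12)
                throw new IllegalArgumentException("degenerate calibration points");
            for (int r = 0; r < 8; r++) {
                if (r == col) continue;
                double f = a[r][col] / a[col][col];
                for (int c = col; c < 9; c++) a[r][c] -= f * a[col][c];
            }
        }
        for (int i = 0; i < 8; i++) h[i] = a[i][8] / a[i][i];
        h[8] = 1;
    }

    /** Applies H to a normalised TUIO point, returning projector pixel coordinates. */
    double[] map(double x, double y) {
        double w = h[6] * x + h[7] * y + h[8];
        return new double[] { (h[0] * x + h[1] * y + h[2]) / w, (h[3] * x + h[4] * y + h[5]) / w };
    }

    public static void main(String[] args) {
        // Where the page corners appear to the camera (TUIO space, made-up values)...
        double[][] camera = { {0.2, 0.1}, {0.8, 0.15}, {0.85, 0.9}, {0.15, 0.85} };
        // ...and where the projector must draw to land on those same corners.
        double[][] projector = { {0, 0}, {1920, 0}, {1920, 1080}, {0, 1080} };
        MarkerMapper mapper = new MarkerMapper(camera, projector);
        double[] p = mapper.map(0.5, 0.5);
        System.out.printf("marker at (0.5, 0.5) -> (%.0f, %.0f)%n", p[0], p[1]);
    }
}
```

Four points determine the homography exactly; with more calibration points, a least-squares fit would be more robust to small measurement errors.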